I built a desktop robot capable of communicating through artificial intelligence. The system incorporated animatronic eyes that tracked the general direction of a speaking individual and employed a repurposed CRT television to visualize speech waveforms. Throughout this process, I learned about integrating AI capabilities into robotic systems, gained insights into mechatronics and user interface design, and refined my approach to sourcing materials and managing complex assemblies.

Component Sourcing and Broader Experiences

Sourcing exceptionally small CRT televisions led me to places I wouldn’t usually visit, such as Chor Bazaar. I found 5-inch and 5.5-inch CRT sets from local sellers, gaining a new appreciation for how these markets operate and forging connections that could prove valuable for future projects. Similarly, for the hyper-realistic eye finish, I collaborated with professionals in the film industry who specialize in resin applications. Observing their work broadened my understanding of material properties and finishing techniques.

Mechanical Assembly and Structure

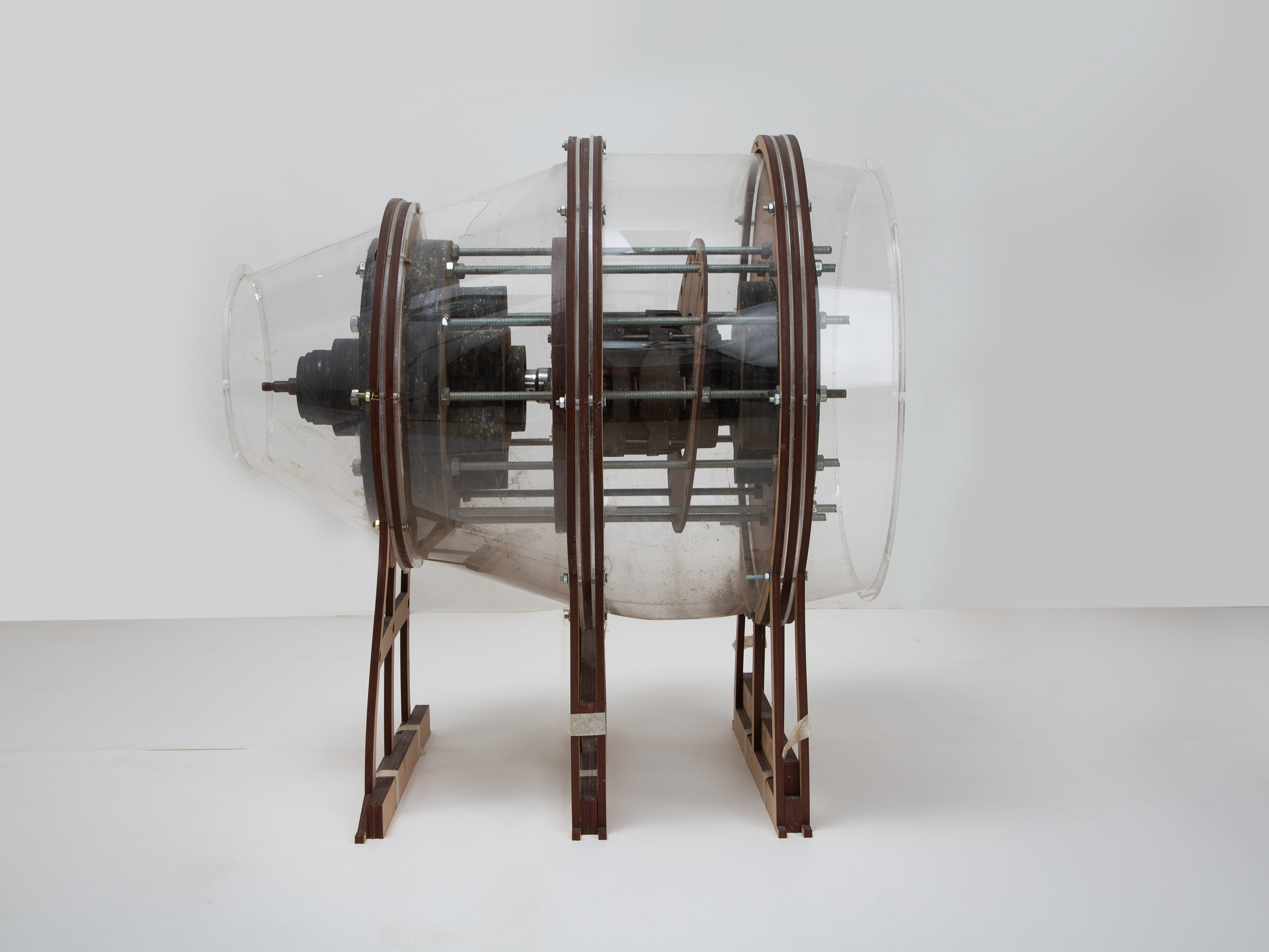

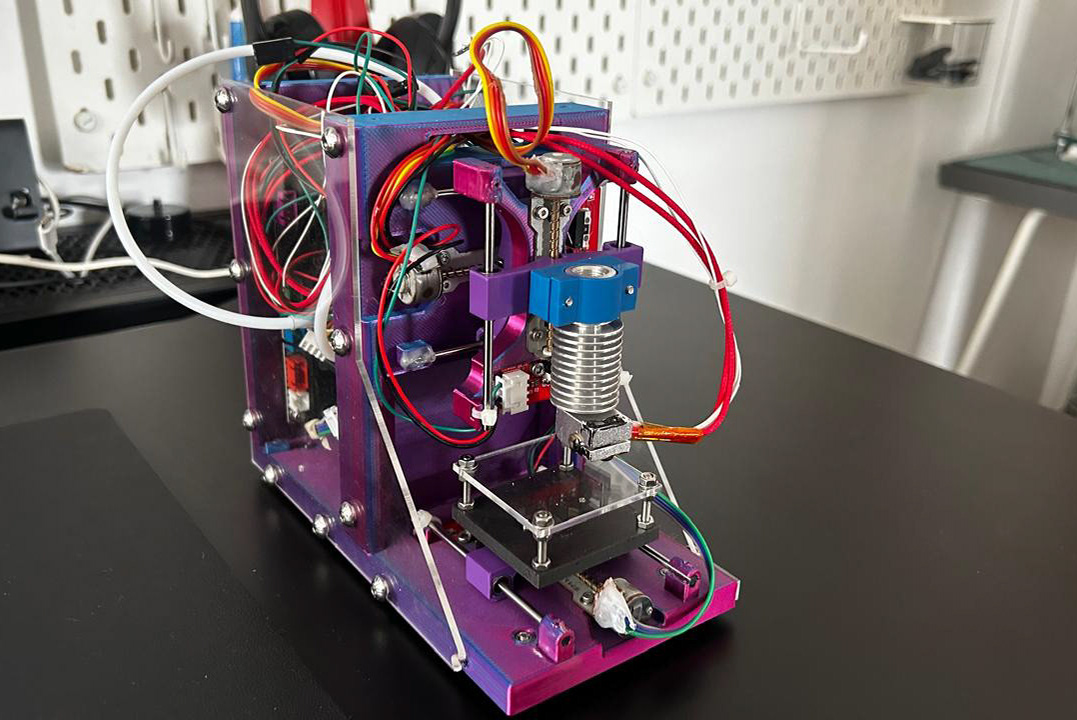

The robot’s mechanical assembly consisted of four laser-cut acrylic layers stacked with nylon standoffs and stainless-steel threaded rods. Six standard MG90S micro servos, sourced from Robu, were mounted on 3D-printed brackets behind the eye assembly. Four servos controlled the eyelids (two per eye), one managed horizontal rotation, and one handled vertical tilt. Rubber grommets and foam pads reduced vibration transmission, while the 3D-printed ABS eyeballs, coated in epoxy resin, provided a realistic appearance.

Electronics, AI Integration, and Safety Measures

An Arduino Mega managed real-time operations such as servo control and sensor input, while a Raspberry Pi 4 handled speech recognition, text-to-speech synthesis, and language inference. The Arduino and Pi communicated via a simple USB serial protocol, allowing the Pi to send high-level commands and receive sensor data. Initially, I used cloud-based AI services (e.g., Alexa or Google Speech-to-Text), streaming audio from a USB microphone and receiving responses with little noticeable delay. Basic caching strategies and a limited offline model helped reduce reliance on constant internet connectivity.
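The write-up does not specify the serial message format, so the following is only a minimal sketch of what the Pi side of such a link could look like, assuming a newline-terminated ASCII protocol. The command name `EYE`, the sensor tag `DIST`, and both helper functions are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


def encode_command(name: str, *args: int) -> bytes:
    """Build one newline-terminated ASCII command, e.g. b"EYE 90 45\n"."""
    fields = [name.upper(), *(str(int(a)) for a in args)]
    return (" ".join(fields) + "\n").encode("ascii")


@dataclass
class SensorReading:
    kind: str     # hypothetical tag sent by the Arduino, e.g. "DIST"
    value: float  # numeric payload that follows the tag


def parse_sensor_line(line: bytes) -> Optional[SensorReading]:
    """Parse a line such as b"DIST 42.5\r\n"; return None if it is malformed."""
    parts = line.decode("ascii", errors="replace").split()
    if len(parts) != 2:
        return None
    try:
        return SensorReading(kind=parts[0].upper(), value=float(parts[1]))
    except ValueError:
        # The second field was not a number, so the line is ignored.
        return None
```

On the Pi, these helpers could sit on top of pySerial: open the port with `serial.Serial("/dev/ttyACM0", 115200, timeout=1)` (the device path and baud rate are assumptions), send with `port.write(encode_command("EYE", 90, 45))`, and pass each `port.readline()` result to `parse_sensor_line`. A text protocol is easy to debug from a serial monitor, at the cost of a few extra bytes per message.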

I used a small microphone array and a single ultrasonic sensor to estimate the speaker’s location. Although not perfectly accurate, this setup allowed the robot’s eyes to orient toward the user’s general direction. The CRT was driven by an audio signal conditioned through a simple RC filter and a small transformer, creating a rough waveform corresponding to spoken responses.
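The text does not say how the direction was computed. One common approach with two microphones is to convert the time difference of arrival into a bearing, then clamp that bearing to the eye servo's travel. The sketch below assumes that approach; the microphone spacing, servo center, and travel limits are illustrative values, not measurements from the build.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # dry air at roughly 20 degrees Celsius


def bearing_from_delay(delay_s: float, mic_spacing_m: float) -> float:
    """Bearing in degrees from straight ahead, given the arrival-time difference.

    Uses sin(theta) = c * delay / spacing. The ratio is clamped to [-1, 1]
    so that measurement noise cannot push asin() out of its domain.
    """
    ratio = SPEED_OF_SOUND_M_S * delay_s / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))


def servo_angle_for_bearing(bearing_deg: float,
                            center_deg: float = 90.0,
                            travel_deg: float = 45.0) -> float:
    """Map a bearing onto the horizontal eye servo, limited to +/- travel_deg."""
    clamped = max(-travel_deg, min(travel_deg, bearing_deg))
    return center_deg + clamped
```

For example, with microphones 10 cm apart, a delay of about 146 microseconds corresponds to a bearing near 30 degrees, which the second function would turn into a servo angle near 120 degrees. Because the estimate is coarse, clamping to a limited travel keeps the eyes pointed in a plausible direction even when the delay measurement is noisy.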

Working with high-voltage components from the CRT required careful precautions. Under the guidance of an experienced electronics mentor, I discharged the tube and capacitors safely. The CRT’s internal transformer and high-voltage leads were isolated, and warning labels marked these sections. Low-voltage wiring for the servos, Arduino, and Pi was routed away from high-voltage paths, with shielded audio cables and twisted-pair servo leads minimizing electromagnetic interference.

User Interface and Power Management

Beyond voice interaction and eye movements, I integrated an RGB LED strip around the base platform. The LED colors indicated different states—green for listening, blue for processing, and red for errors—offering immediate visual feedback. A capacitive touch button on the front acrylic layer enabled manual wake-up or mode changes, making the system more accessible and intuitive.
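The state-to-color mapping described above can be captured in a small lookup table. This is a sketch only: the `RobotState` enum, the `STATE_COLORS` table, and the `LED r g b` text command are hypothetical names, and the original firmware may have driven the strip differently.

```python
from enum import Enum


class RobotState(Enum):
    LISTENING = "listening"
    PROCESSING = "processing"
    ERROR = "error"


# (red, green, blue) values, 0-255, matching the colors named in the text.
STATE_COLORS = {
    RobotState.LISTENING: (0, 255, 0),   # green
    RobotState.PROCESSING: (0, 0, 255),  # blue
    RobotState.ERROR: (255, 0, 0),       # red
}


def led_command(state: RobotState) -> bytes:
    """Build a hypothetical newline-terminated 'LED r g b' command for a state."""
    r, g, b = STATE_COLORS[state]
    return f"LED {r} {g} {b}\n".encode("ascii")
```

Keeping the mapping in one table means a new state (for example, a "speaking" color) only needs one extra entry rather than changes scattered through the control code.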

A 12 V DC adapter powered the entire system. A DC-DC buck converter supplied regulated 5 V for the Arduino and servos, while a separate linear regulator provided a clean 5 V line for the Pi and audio circuitry. The CRT ran from its own dedicated transformer, fed from the 12 V rail. This arrangement provided stable current distribution and prevented voltage drops under peak loads.
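A quick power-budget check is useful for a split-rail arrangement like this. The sketch below uses two standard relations: a linear regulator dissipates (V_in - V_out) x I as heat, and a buck converter draws roughly V_out x I / (efficiency x V_in) from its input. The load currents and the 90% efficiency in the example are assumed figures for illustration, not measurements from this robot.

```python
def linear_regulator_heat_w(v_in: float, v_out: float, load_a: float) -> float:
    """Heat dissipated by a linear regulator: the dropped voltage times the load current."""
    return (v_in - v_out) * load_a


def buck_input_current_a(v_in: float, v_out: float, load_a: float,
                         efficiency: float = 0.9) -> float:
    """Approximate input current of a buck converter at a given efficiency."""
    return (v_out * load_a) / (efficiency * v_in)


if __name__ == "__main__":
    # Assumed example loads: 1 A on the linear 5 V rail, 2 A on the buck 5 V rail.
    heat = linear_regulator_heat_w(12.0, 5.0, 1.0)
    draw = buck_input_current_a(12.0, 5.0, 2.0)
    print(f"Linear regulator heat: {heat:.1f} W")
    print(f"Buck converter input current: {draw:.2f} A")
```

Running the numbers with measured currents shows how much heat the linear regulator must shed and how much headroom the 12 V adapter needs when all servos move at once.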

Future Improvements and Reflections

Reflecting on the project, I identified opportunities for future enhancements. Reducing costs could involve substituting the CRT display with a compact OLED or e-paper panel, employing more efficient servos, or transitioning to lower-cost microcontrollers or single-board computers. Fabrication strategies such as injection-molded housings or SLS printing could simplify assembly and improve robustness. AI models could run locally on smaller, optimized frameworks, eliminating the need for cloud services and enhancing responsiveness. These improvements would support the development of a more affordable, portable, and capable robot, ideally suited for introduction into the Re-Engineering Club and mass production.

This project strengthened my technical proficiencies in robotics, AI integration, and mechatronics and enriched my perspective through interactions with diverse communities, suppliers, and professionals. I gained confidence in bridging the gap between conceptual ideas and practical implementations by navigating challenges, refining designs, and iterating toward improved solutions.